Run a program

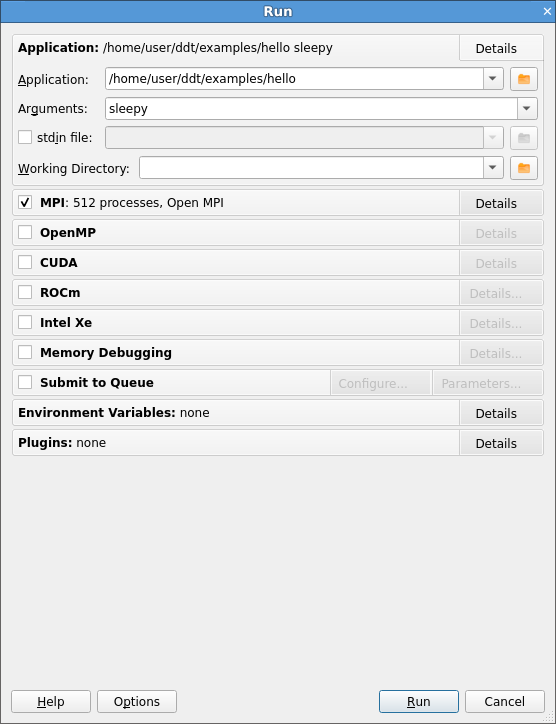

When you click Run on the Welcome page, the Run window displays.

The settings are grouped into sections. Click Details to expand a section.

Application

- Application:

The full file path to your application. If you specified one on the command line, this is automatically filled in. You can browse and select your application.

Note

Many MPIs have problems working with directory and program names that contain spaces. We recommend that you do not use spaces in directory and file names.

- Arguments (optional):

The arguments passed to your application. These are automatically filled if you entered some on the command line.

Note

Avoid using quote characters such as ' and ", as these may be interpreted differently by Linaro DDT and your command shell. If you must use these characters but cannot get them to work as expected, contact Forge Support.

- stdin file (optional):

This enables you to choose a file to be used as the standard input (stdin) for your program. Arguments are automatically added to mpirun to ensure your input file is used.

- Working Directory (optional):

The working directory to use when debugging your program. If this is blank then Linaro DDT’s working directory is used instead.
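The two notes above (no spaces in paths, no quote characters in arguments) can be checked before you fill in the Run window. The following is a minimal sketch, not part of Linaro DDT: the function name `check_run_settings` and the sample paths are hypothetical and only illustrate the checks.

```python
from typing import List

# Quote characters that the note above recommends avoiding in arguments.
QUOTE_CHARS = ("'", '"')


def check_run_settings(application: str, arguments: List[str]) -> List[str]:
    """Return human-readable warnings for settings the notes advise against."""
    warnings = []
    # Many MPIs have problems with spaces in directory and program names.
    if " " in application:
        warnings.append(f"application path contains spaces: {application!r}")
    # Quotes may be interpreted differently by the debugger and the shell.
    for arg in arguments:
        if any(q in arg for q in QUOTE_CHARS):
            warnings.append(f"argument contains a quote character: {arg!r}")
    return warnings


# Hypothetical values, used only to show the checks firing.
for warning in check_run_settings("/home/user/my app/solver", ['--title="run 1"']):
    print("warning:", warning)
```

An empty list means neither condition was found. It does not guarantee that your MPI or shell will accept the values.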

MPI

Note

If you only have a single process license or have selected none as your MPI Implementation, the MPI options will be missing. The MPI options are not available when Linaro DDT is in single process mode. See Debug single-process programs for more details about using Linaro DDT with a single process.

- Number of processes:

The number of processes that you want to debug. Linaro DDT supports hundreds of thousands of processes but this is limited by your license.

- Number of nodes:

This is the number of compute nodes that you want to use to run your program.

- Processes per node:

This is the number of MPI processes to run on each compute node.

- Implementation:

The MPI implementation to use. If you are submitting a job to a queue, the queue settings will also be summarized here. Click Change to change the MPI implementation.

Note

The choice of MPI implementation is critical to correctly starting Linaro DDT. Your system will normally use one particular MPI implementation. If you are unsure which to choose, try generic, or consult your system administrator or Forge Support. A list of settings for common implementations is provided in MPI distribution notes and known issues.

Note

If the MPI command you want is not in your PATH, or you want to use an MPI run command that is not your default one, you can configure this using the Options window (see System on Optional configuration).

- mpirun arguments (optional):

The arguments that are passed to mpirun or your equivalent, usually prior to your executable name in normal usage. You can place machine file arguments here, if necessary. For most users this box can be left empty. You can also specify arguments on the command line (using the --mpiargs command-line argument) or using the FORGE_MPIRUN_ARGUMENTS environment variable if this is more convenient.

Note

You should not enter the -np argument as Linaro DDT will do this for you.

Note

You should not enter the --task-nb or --process-nb arguments as Linaro DDT will do this for you.
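If you supply mpirun arguments through the FORGE_MPIRUN_ARGUMENTS environment variable, a small pre-check can catch the process-count flags that the notes above say to leave out. This is a sketch under assumptions: the helper names are hypothetical, and `--hostfile hosts.txt` is only an illustrative machine-file argument that your MPI may or may not accept.

```python
import os
import shlex

# Flags the notes above say Linaro DDT adds for you.
RESERVED_FLAGS = ("-np", "--task-nb", "--process-nb")


def reserved_flags_in(mpirun_arguments: str) -> list:
    """Return any reserved process-count flags found in the argument string."""
    found = []
    for token in shlex.split(mpirun_arguments):
        name = token.split("=", 1)[0]  # also catches the "--flag=value" form
        if name in RESERVED_FLAGS:
            found.append(name)
    return found


def environment_with_mpirun_arguments(mpirun_arguments: str, base_env=None) -> dict:
    """Return a copy of the environment with FORGE_MPIRUN_ARGUMENTS set."""
    reserved = reserved_flags_in(mpirun_arguments)
    if reserved:
        raise ValueError(f"remove {reserved}: Linaro DDT sets these itself")
    env = dict(os.environ if base_env is None else base_env)
    env["FORGE_MPIRUN_ARGUMENTS"] = mpirun_arguments
    return env


# Illustrative machine-file argument (an assumption, not a recommendation).
env = environment_with_mpirun_arguments("--hostfile hosts.txt", base_env={})
print(env["FORGE_MPIRUN_ARGUMENTS"])
```

The returned dictionary is intended to be used as the environment of whatever process starts Linaro DDT. The sketch does not launch anything itself.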

OpenMP

- Number of OpenMP threads:

The number of OpenMP threads to run your application with. The OMP_NUM_THREADS environment variable is set to this value.

For more information on debugging OpenMP programs see Debug OpenMP programs.

CUDA

If your license supports it, you can also debug GPU programs by enabling CUDA support. For more information on debugging CUDA programs see NVIDIA GPU debugging.

- Track GPU Allocations:

Tracks CUDA memory allocations made using cudaMalloc, and similar methods. See CUDA memory debugging for more information.

- Detect invalid accesses (memcheck):

Turns on the CUDA-MEMCHECK error detection tool. See CUDA memory debugging for more information.

Note

Detect invalid accesses (memcheck) is not supported with CUDA 12.

Note

Debugging applications using more than one GPU technology (CUDA, ROCm or Intel Xe) is not supported.

ROCm

If your license supports it, you can also debug GPU programs by enabling ROCm support. For more information on debugging ROCm programs see ROCm GPU debugging.

Note

Debugging applications using more than one GPU technology (CUDA, ROCm or Intel Xe) is not supported.

Intel Xe

If your license supports it, you can also debug GPU programs by enabling Intel Xe support. For more information on debugging Intel Xe programs see Intel Xe GPU debugging.

Note

Debugging applications using more than one GPU technology (CUDA, ROCm or Intel Xe) is not supported.

Memory debugging

Click Details to open the Memory Debugging Options window.

See Memory debugging options for details of the available settings.

Environment variables

Use the optional Environment Variables section to specify additional environment variables to pass to mpirun or its equivalent. These environment variables can also be passed to your program, depending on which MPI implementation your system uses. Most users will not need to use this section.

Note

On some systems it may be necessary to set environment variables for the Linaro DDT backend itself. For example, if /tmp is unusable on the compute nodes you may want to set TMPDIR to a different directory. You can specify such environment variables in /path/to/forge/lib/environment. Enter one variable per line and separate the variable name and value with =. For example, TMPDIR=/work/user.
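The line format described in the note above (one variable per line, name and value separated by =) can be generated programmatically. The sketch below is only an illustration: the function name `format_environment_lines` is hypothetical, and the validation rules are an assumption made to keep each entry on a single line.

```python
def format_environment_lines(variables: dict) -> str:
    """Render variables as NAME=VALUE lines, one variable per line."""
    lines = []
    for name, value in variables.items():
        # Assumed sanity checks so that every entry stays a single NAME=VALUE line.
        if not name or "=" in name:
            raise ValueError(f"invalid variable name: {name!r}")
        if "\n" in name or "\n" in value:
            raise ValueError(f"{name!r} must fit on one line")
        lines.append(f"{name}={value}")
    return "\n".join(lines) + "\n"


# The example from the note above.
print(format_environment_lines({"TMPDIR": "/work/user"}), end="")
# prints: TMPDIR=/work/user
```

You would then add the resulting text to the environment file in your own Forge installation.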

Plugins

The optional Plugins section lets you enable plugins for various third-party libraries, such as the Intel Message Checker or Marmot. See Use and write plugins for more information.

Run the program

Click Run to start your program, or Submit if working through a queue (see Integration with queuing systems). This runs your program through the debug interface you selected and allows your MPI implementation to determine which nodes to start which processes on.

Note

If you have a program compiled with Intel ifort or GNU g77 you may not see your code and highlight line when Linaro DDT starts. This is because those compilers create a pseudo MAIN function, above the top level of your code. To fix this you can either open your Source Code window, add a breakpoint in your code, then run to that breakpoint, or you can use the Step into function to step into your code.

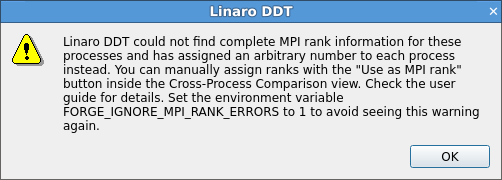

When your program starts, Linaro DDT attempts to determine the MPI world rank of each process. If this fails, an error message displays:

This means that the number Linaro DDT shows for each process may not be the MPI rank of the process. To correct this you can tell Linaro DDT to use a variable from your program as the rank for each process.

See Assign MPI ranks for details.

End the session

To end your current debugging session, select . This closes all processes and stops any running code. If any processes remain you might have to clean them up manually using the kill command, or a command provided with your MPI implementation.
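If processes are left behind, the manual cleanup amounts to sending them a signal, which is what the kill command does. The following is a minimal sketch for POSIX systems, assuming you have already identified the process IDs yourself. The function name `terminate_processes` is hypothetical, and the sketch is not a Linaro DDT feature.

```python
import os
import signal


def terminate_processes(pids, sig=signal.SIGTERM):
    """Send a signal to each PID and report what happened to each one."""
    results = {}
    for pid in pids:
        try:
            os.kill(pid, sig)  # same effect as the kill command with this signal
            results[pid] = "signalled"
        except ProcessLookupError:
            results[pid] = "not running"  # the process has already exited
        except PermissionError:
            results[pid] = "not permitted"  # the process belongs to another user
    return results
```

Calling `terminate_processes([12345])` with a PID of your own leftover process asks it to terminate. Check each PID carefully before you use it, because the signal goes to whatever process currently owns that ID.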